Why Deterministic Systems Beat AI for Legal Document Assembly

The hype around generative AI, and large language models (LLMs) in particular, has led many to believe that software can now "write" contracts. This is a dangerous misunderstanding. LLMs are probabilistic: they predict a likely next word. Law is deterministic: specific facts must trigger specific rules.

This article explains why we use deterministic logic engines, not AI, for the core assembly of legal documents, and where AI actually fits in.

Key Concepts

Legal validity requires certainty, which pure AI cannot provide.

- Probabilistic vs. Deterministic — The difference between "probably right" (AI) and "logically necessary" (Code).

- Hallucination Risk — The tendency of AI to invent legal citations or clauses that sound good but do not exist.

- Auditability — The absolute requirement to be able to trace exactly why a specific clause appeared in a document.

The Hallucination Problem

If you ask an LLM to write a non-compete clause, it will produce a fluent, plausible one. But it might include a term, such as a 5-year duration, that is void by statute in your jurisdiction. The LLM doesn't "know" the statute; it only reproduces what non-competes usually look like.

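A deterministic system encodes the rule explicitly: if Jurisdiction = CA, then Non-Compete = Void, subject to whatever statutory exceptions are encoded alongside it. The same facts always produce the same result. There is no guessing.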
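The rule above can be sketched in a few lines of Python. This is a minimal illustration, not our engine: the `Status` enum, the `NONCOMPETE_RULES` table, and both function names are hypothetical, and the table holds only the one California rule mentioned in this article. It ignores any exceptions a real rule set would need. The point it shows is that a jurisdiction with no encoded rule raises an error and goes to a human, instead of being guessed.

```python
from enum import Enum


class Status(Enum):
    VOID = "void"
    PERMITTED = "permitted"


# Hypothetical rule table for illustration only. It is not legal advice and
# not real engine data; only the CA entry comes from the article.
NONCOMPETE_RULES: dict[str, Status] = {
    "CA": Status.VOID,
}


def noncompete_status(jurisdiction: str) -> Status:
    """Look up the encoded rule; refuse to answer if none exists."""
    key = jurisdiction.strip().upper()
    if key not in NONCOMPETE_RULES:
        # No rule encoded: stop and escalate rather than guess.
        raise LookupError(f"No non-compete rule encoded for {key!r}")
    return NONCOMPETE_RULES[key]


def assemble(jurisdiction: str) -> list[str]:
    """Return the clause identifiers to include for this jurisdiction."""
    clauses = ["confidentiality"]
    if noncompete_status(jurisdiction) is Status.PERMITTED:
        clauses.append("non_compete")
    return clauses
```

Calling `assemble("CA")` returns the clause list without a non-compete every time it runs, and `assemble("TX")` fails loudly because this sketch has no rule for that jurisdiction.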

Auditability

When a document is challenged in court, you need to be able to explain exactly how it was created. "The AI wrote it" is not a defense.

With a rules engine, we can prove: "This clause was included because the client answered 'Yes' to Question 4 and the asset value exceeded $5M." This logic trail is the shield against malpractice claims.
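One way to picture that logic trail is a rule that returns its reasons along with its decision. The sketch below is an assumption-laden example: the `Decision` record, the `decide_clause` function, the `"Q4"` key, and the `"asset_protection"` clause name are all hypothetical, chosen only to mirror the Question 4 and $5M example above.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    clause: str
    included: bool
    reasons: list[str] = field(default_factory=list)


# Hypothetical threshold taken from the article's $5M example.
ASSET_THRESHOLD = 5_000_000


def decide_clause(answers: dict[str, str], asset_value: float) -> Decision:
    """Include the clause only if Q4 is 'Yes' and assets exceed the threshold.

    Every fact the rule relied on is recorded, whether or not the clause
    ends up in the document.
    """
    q4 = answers.get("Q4")
    q4_yes = q4 == "Yes"
    over_threshold = asset_value > ASSET_THRESHOLD
    reasons = [
        f"Q4 answered {q4!r} (rule requires 'Yes')",
        f"asset value {asset_value:,.0f} (rule requires > {ASSET_THRESHOLD:,})",
    ]
    return Decision("asset_protection", q4_yes and over_threshold, reasons)
```

Storing the returned `Decision` next to the generated document gives a record that can be read back later to answer why a clause was, or was not, included.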

Conclusion

AI is a powerful tool for summarization, research, and first-draft correspondence. But for the operative language of legal agreements, we need the certainty of logic, not the creativity of a chatbot.

Our architecture uses AI to interface with humans, but Code to execute the law.

Frequently Asked Questions

Do you use AI at all?

Yes, for intake chatbots, summarizing meetings, and suggesting clause revisions—just not for the final assembly logic.

Will AI eventually replace logic engines?

Unlikely. The law is a rule-based system. It is more efficient to model it with rules than to train a neural net to mimic rules.

Is ChatGPT safe for lawyers?

Only if you verify every single output. Treating it as a drafter is negligent.

Sources & Further Reading

Read More Articles

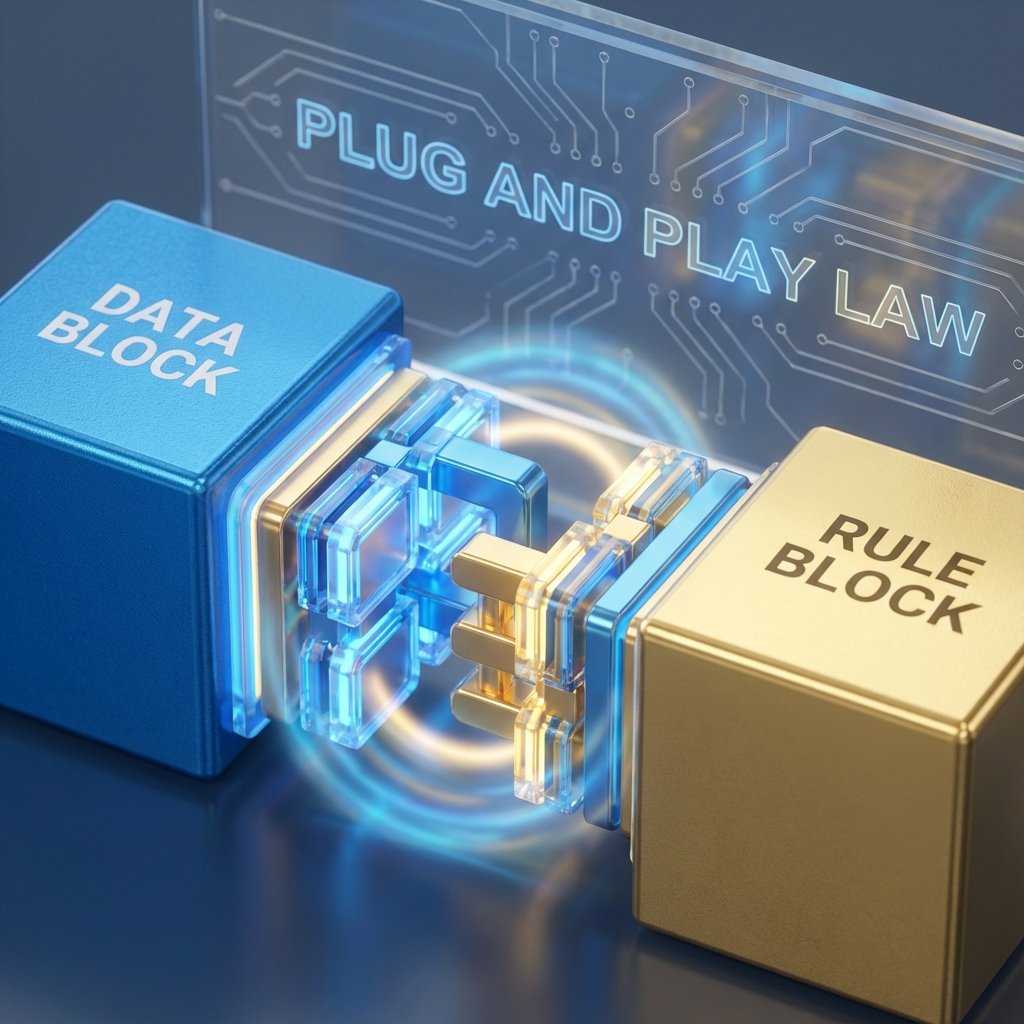

How four specialized components turn complex legal rules into deterministic, client-ready documents using the Velcro Model of Law.

Read Article

Ontology as Infrastructure

Why a legal ontology is not a taxonomy, and why it must behave more like infrastructure than metadata.

Read Article

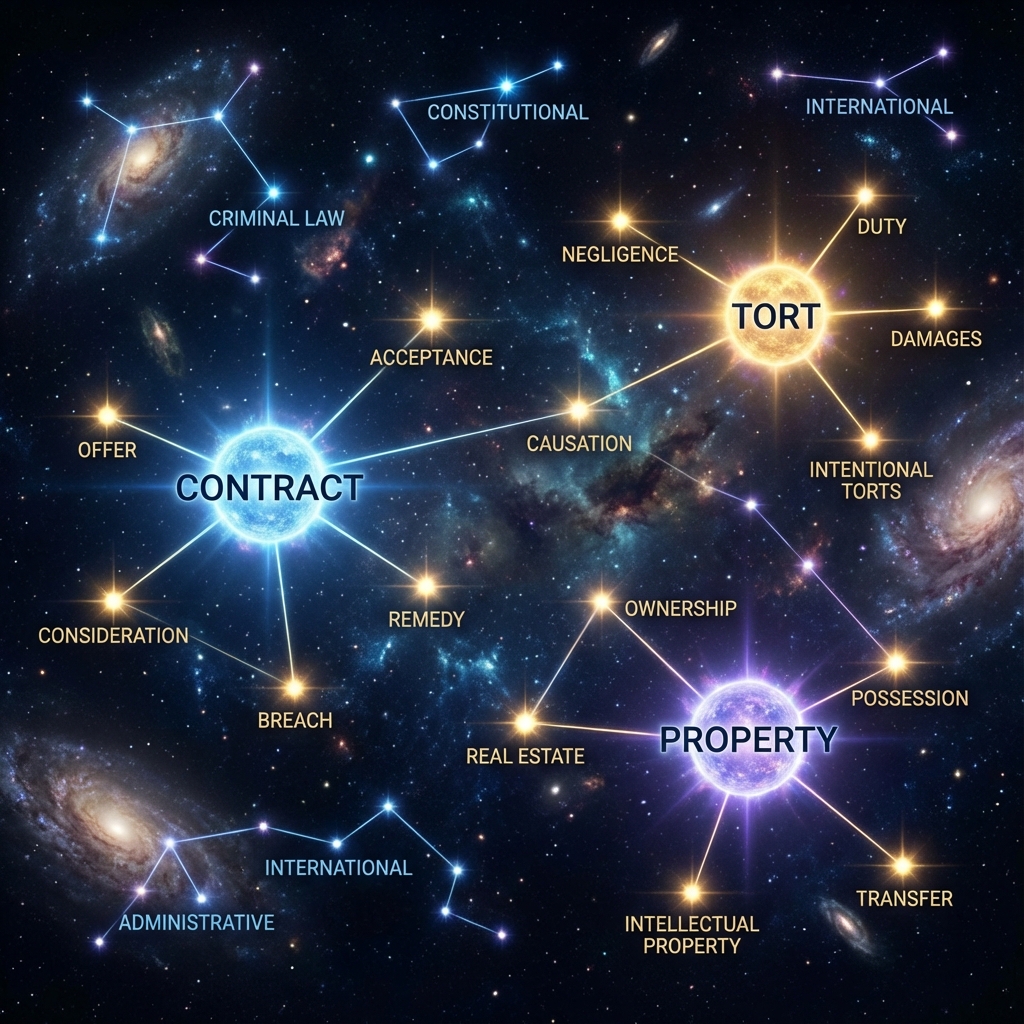

Mapping the Legal Universe

How we extract, filter, and map rules of law across statutes to build a navigable legal universe.

Read Article